Learning Nonprehensile Dynamic Manipulation: Sim2real Vision-based Policy with a Surgical Robot

Radian Gondokaryono, Mustafa Haiderbhai, Sai Aneesh Suryadevara, Lueder A. Kahrs

Medical Computer Vision and Robotics Lab, The Wilfred and Joyce Posluns CIGITI @ SickKids, University of Toronto

Abstract

Surgical tasks such as tissue retraction, tissue exposure, and needle suturing remain challenging in autonomous surgical robotics. One challenge in these tasks is nonprehensile manipulation such as pushing tissue, pressing cloth, and needle threading.

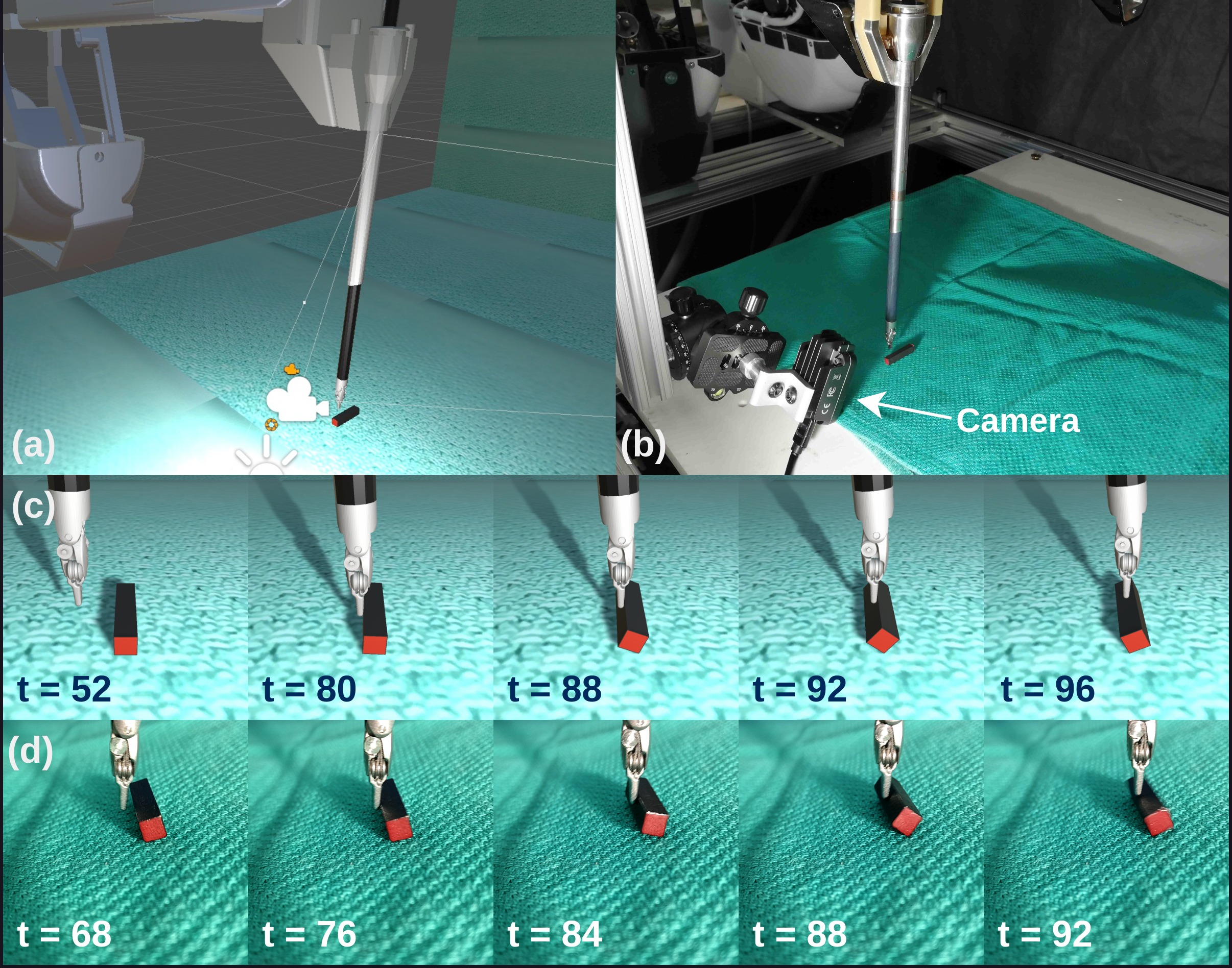

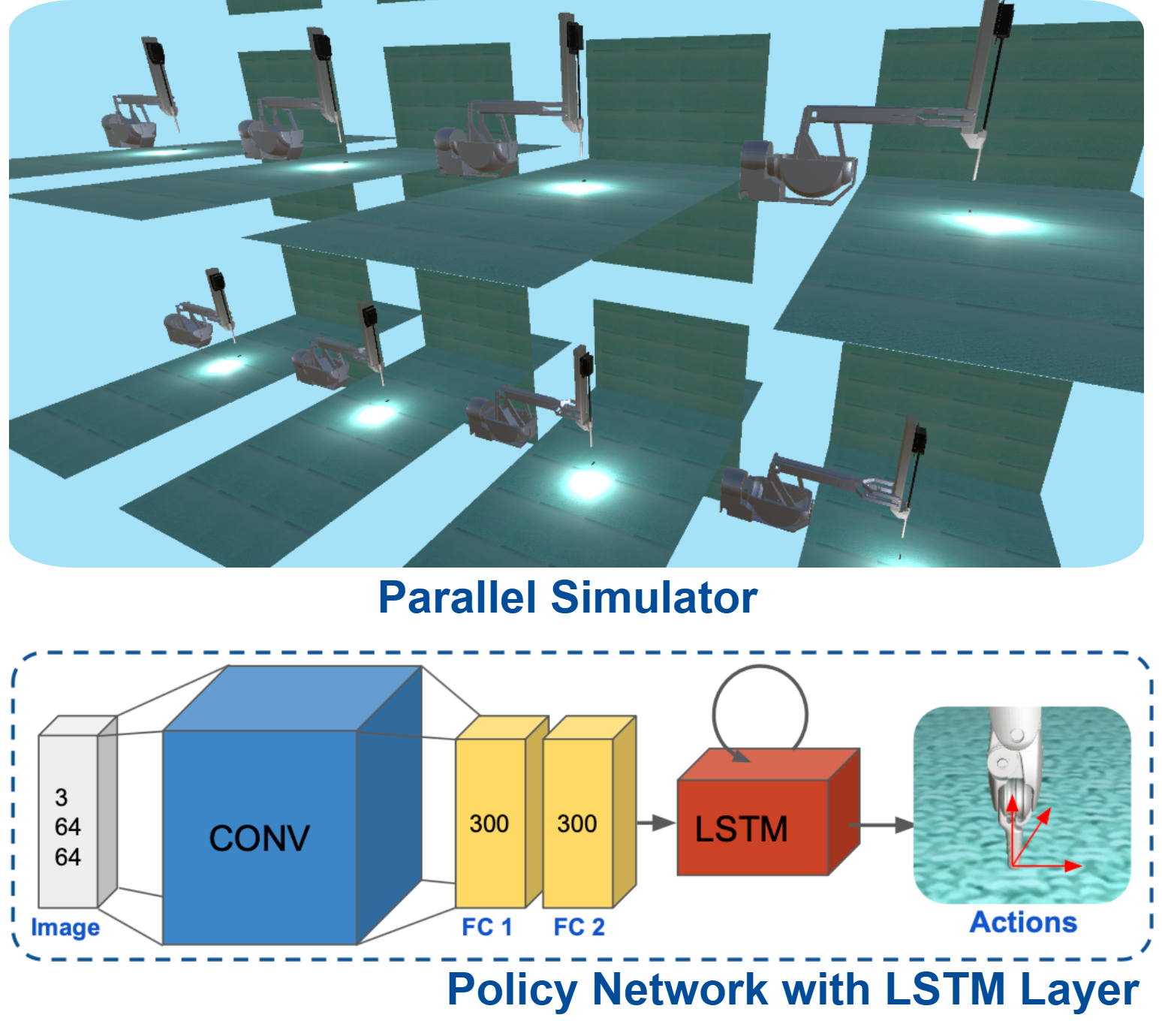

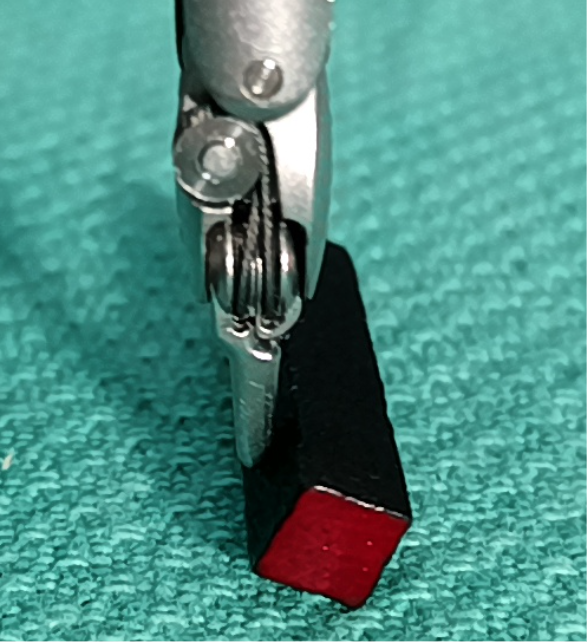

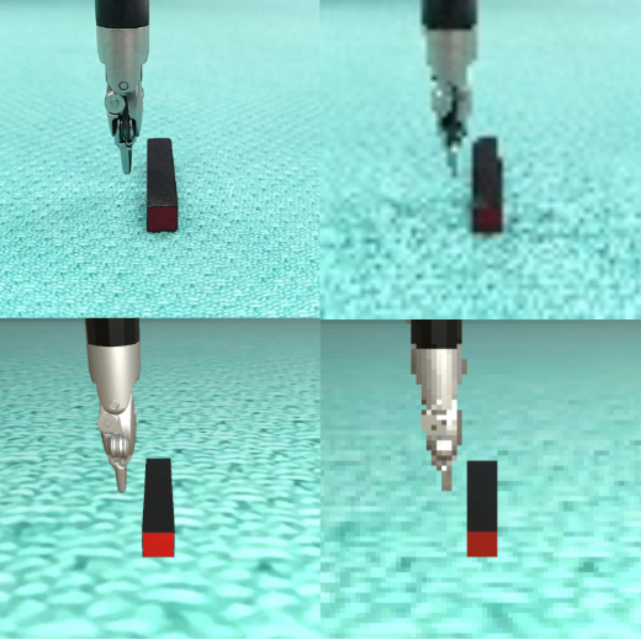

In this work, we isolate the problem of nonprehensile manipulation by implementing a vision-based reinforcement learning agent for rolling a block, a task that involves complex dynamic interactions, small-scale objects, and a narrow field of view. We train agents in simulation with a reward formulation that encourages efficient and safe learning, domain randomization that allows for robust sim2real transfer, and a recurrent memory layer that enables reasoning about randomized dynamics parameters.

We successfully transfer our agents from simulation to the real setup and show robust execution of our vision-based policy with a 96.3% success rate. We analyze and discuss the success rate, trajectories, and recovery behaviours for various models that either use the recurrent memory layer or are trained with a difficult physics environment.
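To make the idea of domain randomization concrete, the following is a minimal sketch of per-episode parameter sampling. It is an illustration under stated assumptions, not the authors' implementation: the names `EpisodeParams`, `RANGES`, and `sample_episode_params` are hypothetical, and every range is a placeholder. Only the incline range is loosely motivated by the 0 to 15 degree inclines tested on the real setup.

```python
import random
from dataclasses import dataclass

# Placeholder ranges for illustration only; none of these values come from the paper.
RANGES = {
    "incline_deg": (0.0, 15.0),           # dynamics: tilt of the surface
    "friction": (0.3, 0.9),               # dynamics: assumed friction coefficient
    "block_mass_scale": (0.8, 1.2),       # dynamics: multiplier on nominal mass
    "light_intensity_scale": (0.7, 1.3),  # visual: multiplier on nominal lighting
}


@dataclass(frozen=True)
class EpisodeParams:
    """Hypothetical container for the parameters redrawn at each episode."""
    incline_deg: float
    friction: float
    block_mass_scale: float
    light_intensity_scale: float


def sample_episode_params(rng: random.Random) -> EpisodeParams:
    """Draw one value per parameter, uniformly within its range."""
    return EpisodeParams(
        **{name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}
    )


rng = random.Random(42)  # fixed seed so the sampled episodes are reproducible
episodes = [sample_episode_params(rng) for _ in range(3)]
for params in episodes:
    print(params)
```

Because the dynamics parameters change every episode and are not visible in a single image, a policy trained this way has to cope with a family of environments rather than a single one.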

Surgical Tasks to an Isolated Dynamics Task

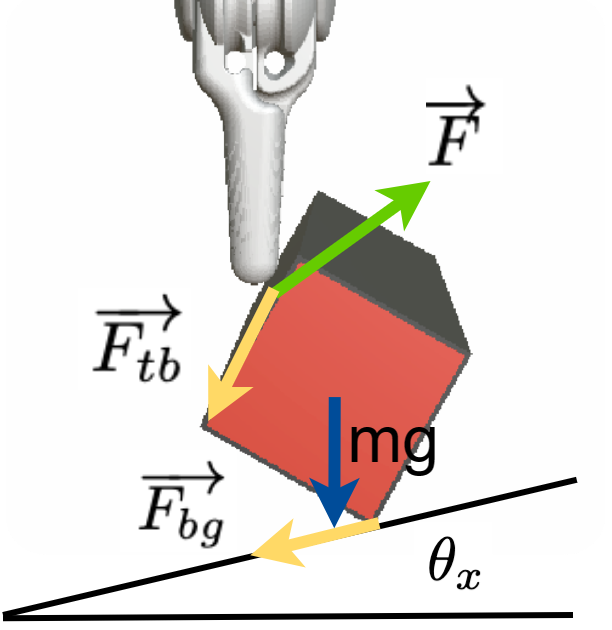

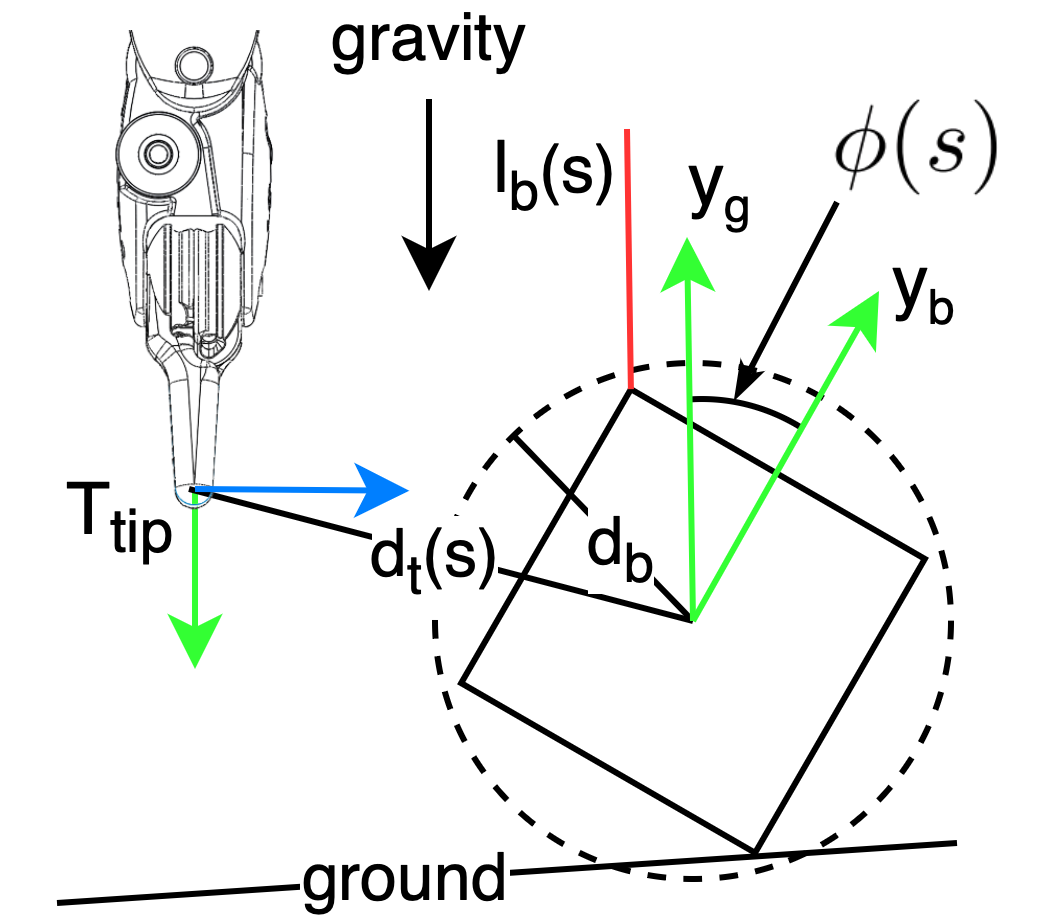

Understanding environment dynamics is key to success in a surgical task with soft body deformations. We define a task to roll a block to isolate the problem of environment dynamics that are unobservable from the image input of an autonomous agent.

Tissue Manipulation

Soft Material Cutting

Roll Dynamic Task

Environment Forces

Overview of Methods

Visual Estimation

Dynamics Estimation

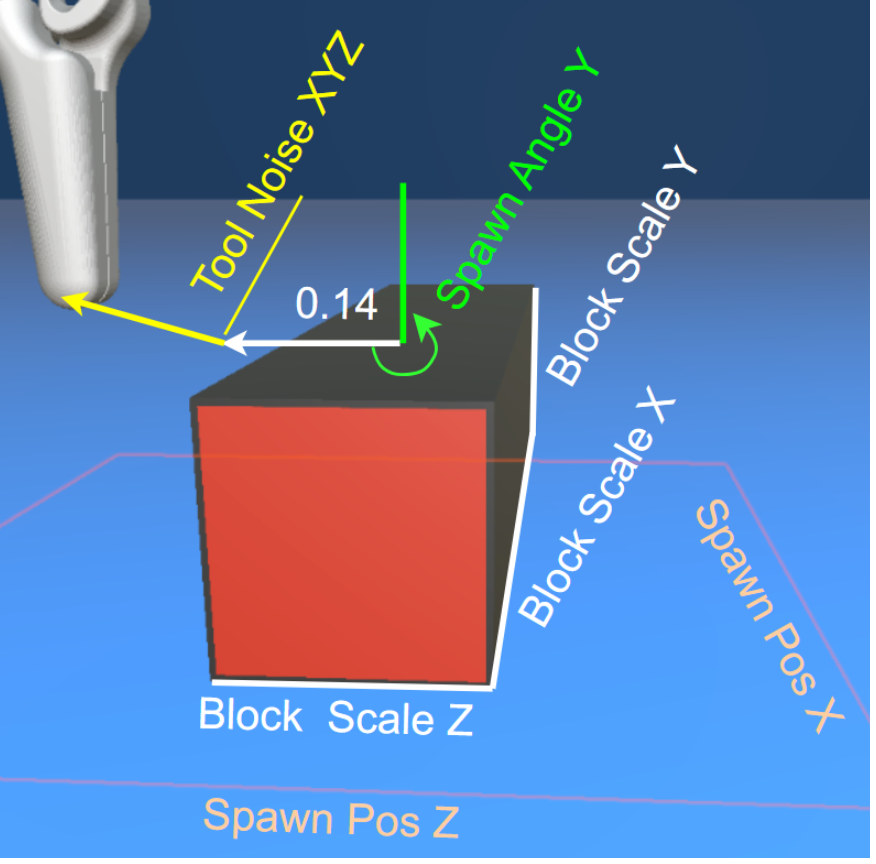

Unity Simulation

Difficult Physics

Reward Function

Domain Randomization

Results on the Real Setup

Results of the learned policy with an LSTM layer, trained with inclination randomization and tested on the real setup at three inclines: 0, 10, and 15 degrees. The model achieved an average success rate of 96.3%, where success means executing a minimum of one roll in a trial. With 9 different starting positions, a total of 135 trials were conducted.
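The reported numbers can be cross-checked with a little arithmetic. The sketch below assumes the 135 trials were split evenly over the 3 inclines and 9 starting positions, which the text does not state explicitly. The repetition count and the success count are inferred from the reported totals, not reported values.

```python
inclines_deg = [0, 10, 15]
start_positions = 9
total_trials = 135
reported_rate = 0.963

# Inferred, assuming an even split over inclines and starting positions.
repeats_per_condition = total_trials // (len(inclines_deg) * start_positions)

# Inferred: the whole number of successes closest to the reported rate.
implied_successes = round(reported_rate * total_trials)
implied_rate = implied_successes / total_trials

print(f"repetitions per incline and position: {repeats_per_condition}")
print(f"implied successes: {implied_successes} of {total_trials}")
print(f"implied success rate: {implied_rate:.1%}")
```

Under these assumptions the figures are consistent with 5 repetitions per condition and 130 successful trials out of 135.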

Incline 0

Incline 10

Incline 15

Observed Behaviours

One Motion Multiple Rolls

Long Slide on Incline

Failure Fall then Recover

Untrained Miniature Block

Failure Push

Failure Unroll

Failure Backflip

Hitting the Ground

Conclusion and Future Work

In this work, we explore the autonomy of a fine manipulation task: rolling a miniature block with a surgical robot. We train a robust vision-based policy in simulation and transfer our agents to a real setup. Our results show that we can achieve 96.3% (up to 100%) sim2real success, robustly manipulating the block to roll. Our work showcases the importance of domain randomization for successful sim2real transfer. It highlights in particular the importance of simulating the physical behaviour of rolling, and shows how inclination randomization can be used to increase the robustness of the roll policy. We compare fully connected networks with LSTM layers and find that LSTMs perform consistently better both in simulation and on the real setup, because they can reason about randomized dynamics parameters that are unobservable from the input image.
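Why memory helps when a dynamics parameter is hidden can be illustrated with a toy example. This is not an LSTM and not the paper's environment: it is a one-dimensional stand-in in which a hypothetical hidden `gain` scales the effect of each push, and an explicit history of (push, displacement) pairs plays the role of the recurrent state. All names and values are assumptions made for illustration.

```python
import random

TARGET = 1.0  # desired displacement per push, arbitrary units (hypothetical)


def step(push: float, gain: float) -> float:
    """Toy dynamics: displacement is the push scaled by a hidden gain."""
    return push * gain


def memoryless_policy(history):
    """Ignores the past and always applies the push suited to a gain of 1.0."""
    return TARGET


def memory_policy(history):
    """Estimates the hidden gain from the last outcome and compensates for it."""
    if not history:
        return TARGET  # nothing observed yet, so fall back to the nominal push
    last_push, last_displacement = history[-1]
    estimated_gain = last_displacement / last_push
    return TARGET / estimated_gain


def final_error(policy, gain: float, steps: int = 5) -> float:
    """Run one episode and return the displacement error on the last step."""
    history = []
    for _ in range(steps):
        push = policy(history)
        history.append((push, step(push, gain)))
    return abs(history[-1][1] - TARGET)


rng = random.Random(0)
gains = [rng.uniform(0.5, 1.5) for _ in range(100)]  # randomized hidden parameter
memoryless_error = sum(final_error(memoryless_policy, g) for g in gains) / len(gains)
memory_error = sum(final_error(memory_policy, g) for g in gains) / len(gains)
print(f"memoryless mean error: {memoryless_error:.3f}")
print(f"with memory mean error: {memory_error:.3e}")
```

In this toy setting the policy with memory identifies the gain after a single push and the memoryless one never does. An LSTM has to learn an analogous estimate implicitly from image sequences.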

In the future, a separate analysis of perception and control seems necessary to determine whether certain failures are related to visual or dynamics parameter differences. One of the main limitations of our work is the need to manually estimate the visual and dynamical properties of the scene; we hope to find alternatives that automate this process. The block rolling task demonstrates that the dVRK surgical robot is capable of fine manipulation tasks with visuomotor policies that reason about environment dynamics. Furthermore, this task is a stepping stone towards further autonomous surgical tasks and is not intended for use in a surgical intervention.

BibTeX Citation

@article{gondokaryono2023learning,
  author={Gondokaryono, Radian and Haiderbhai, Mustafa and Suryadevara, Sai Aneesh and Kahrs, Lueder A.},
  journal={IEEE Robotics and Automation Letters},
  title={Learning Nonprehensile Dynamic Manipulation: Sim2real Vision-Based Policy With a Surgical Robot},
  year={2023},
  volume={8},
  number={10},
  pages={6763-6770},
  doi={10.1109/LRA.2023.3312038}
}